W.J. Astore

Watch out if the robots and computers copy their human creators

Robot dogs as potential enforcers. AI chatbots that write scripts, craft songs, and compose legal briefs. Computers and cameras everywhere, all networked, all connected, all watching—and possibly learning?

Artificial intelligence (AI) is all the rage as science fiction increasingly becomes science fact. I grew up reading and watching Sci-Fi, and the lessons of the genre about AI are not always positive.

Consider three TV shows and movies that I know well:

- Star Trek, “The Ultimate Computer”: In this episode of the classic 1960s TV series, a computer is put in charge of the ship, replacing its human crew. The computer, programmed to think for itself while also replicating the priorities and personality of its human creator, attempts to destroy four other human-crewed starships in its own quest for survival before Captain Kirk and crew are able to outwit and unplug it.

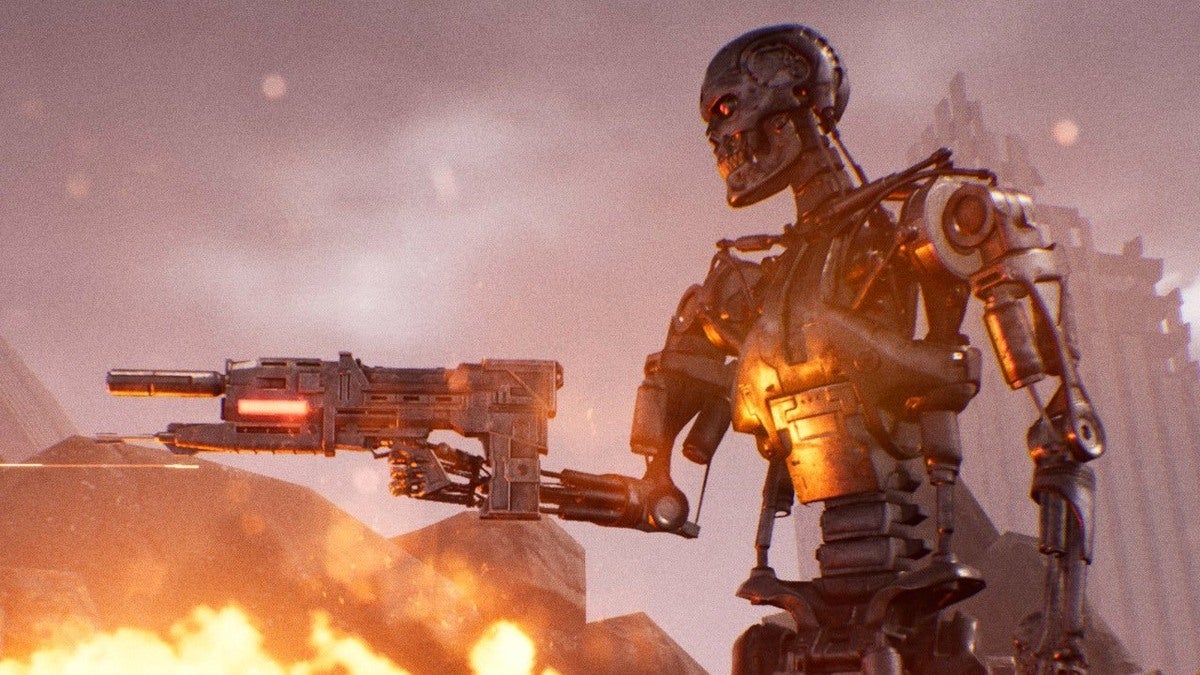

- The Terminator: In this 1980s movie, a robot assassin is sent from the future to kill the mother of the man who will lead the human resistance, thereby ensuring the survival of Skynet, a sophisticated AI network created by the U.S. military that gains consciousness and decides to eliminate its human creators. Many sequels!

- The Matrix: In this 1990s movie, the protagonist, Neo, discovers his world is an illusion, a computer simulation, and that humans are being used as batteries, as power sources, for a world-dominating AI computer matrix. Many sequels!

Sci-Fi books and movies have been warning us for decades that AI networks may be more than we humans can handle. Just think of HAL from Stanley Kubrick’s 2001: A Space Odyssey. Computers and droids of the future may not be like R2-D2 and C-3PO from Star Wars, loyal servants to their human creators.

As they say, it’s only a movie, but I do worry about all the hype surrounding AI. If AI becomes a reflection of its human creators, especially a distorted one, we could have much to fear.

Assuming computers could truly learn from their human creators, it makes sense they would act like us, pursuing violence and issuing death sentences in the name of AI’s security and progress.

To AI networks of the future, linked to robotic enforcer dogs and armed aerial drones, humans just might be the “terrorists.”